Machine Learning Applications in Predictive Asset Maintenance

Artificial intelligence and machine learning are transforming maintenance from reactive and scheduled approaches to predictive strategies that anticipate failures before they occur. These technologies analyze vast amounts of operational data, identify subtle patterns indicating impending problems, and optimize maintenance timing to minimize costs while maximizing reliability.

The Evolution to Predictive Maintenance

Maintenance strategies have evolved through several generations. Reactive maintenance fixes equipment after failures occur, resulting in unplanned downtime and secondary damage. Preventive maintenance performs activities on fixed schedules regardless of actual condition, often replacing components prematurely while missing degradation between intervals.

Condition-based maintenance uses sensor data and inspections to trigger interventions when degradation reaches predetermined thresholds. This approach improves efficiency but still relies on simple rules rather than sophisticated predictions.

Predictive maintenance powered by machine learning represents the next evolution. These systems continuously analyze diverse data sources, including sensor measurements, operational parameters, maintenance histories, and environmental conditions. Advanced algorithms detect complex patterns that indicate future failures, predict remaining useful life, and optimize maintenance timing. The goal is maintenance performed just in time: late enough to avoid replacing healthy components, early enough to prevent failure.

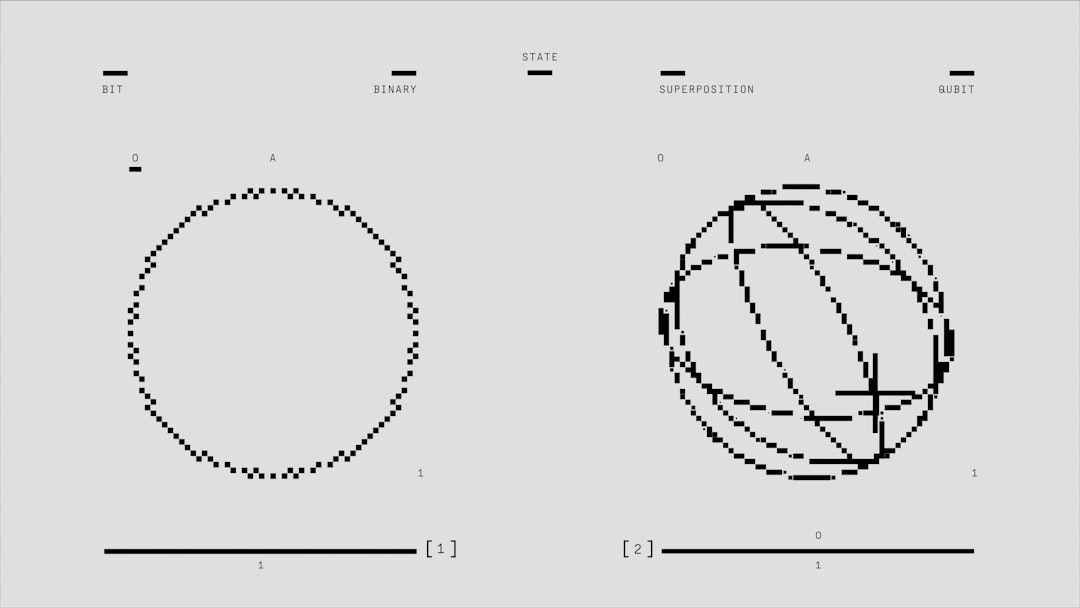

Machine Learning Fundamentals for Maintenance

Machine learning encompasses techniques that improve automatically with experience rather than being explicitly programmed for each task. These methods learn patterns from historical data and apply the learned relationships to make predictions about new situations.

Supervised Learning

Supervised learning algorithms learn from labeled training data where outcomes are known. For maintenance applications, this includes historical failure events, degradation measurements, and maintenance interventions. The algorithm identifies patterns that precede failures and uses these patterns to predict future events.

Classification algorithms predict categorical outcomes like whether a failure will occur within a specified time window. Common algorithms include logistic regression, decision trees, random forests, support vector machines, and neural networks. Each has strengths for different data characteristics and problem types.

Regression algorithms predict continuous outcomes such as remaining useful life or time to failure. Linear regression provides baseline capability while more sophisticated approaches like gradient boosting and neural networks capture complex nonlinear relationships.

Unsupervised Learning

Unsupervised learning discovers patterns in data without predefined labels. Anomaly detection identifies unusual conditions that may indicate emerging problems. Clustering groups similar operating patterns, enabling specialized models for different operational modes. Dimensionality reduction techniques compress high-dimensional sensor data into informative features.

Deep Learning

Deep learning uses multi-layer neural networks that automatically learn hierarchical feature representations from raw data. Convolutional neural networks excel at processing images and spatial data like thermal images or vibration spectrograms. Recurrent neural networks and transformers model time series data, capturing temporal dependencies in sensor measurements.

Failure Prediction Models

Predicting failures before they occur enables proactive maintenance that prevents unplanned downtime. Machine learning approaches to failure prediction range from simple statistical models to sophisticated deep learning architectures.

Binary Classification for Failure Prediction

The simplest approach frames failure prediction as binary classification: will the asset fail within the next time period? Random forest classifiers have proven particularly effective for this task, handling diverse feature types, capturing nonlinear relationships, and providing feature importance rankings.

Training requires historical sensor measurements and operational parameters recorded before failures, along with data from normal operation. The model learns patterns that differentiate pre-failure conditions from healthy states. Common performance metrics each emphasize a different aspect of prediction quality: precision is the share of predicted failures that actually occur, recall is the share of actual failures the model catches, and area under the ROC curve summarizes how well the model ranks risky assets across all decision thresholds.
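As a minimal sketch of the framing above, the following Python shows how samples might be labeled as pre-failure and how precision and recall are computed from predictions. The function names, the day-based timestamps, the 14-day horizon, and all sample values are illustrative assumptions, not details of any particular system.

```python
def label_pre_failure(sample_days, failure_days, horizon_days):
    """Label a sample 1 if any failure occurs within horizon_days after it."""
    return [
        int(any(0 < f - d <= horizon_days for f in failure_days))
        for d in sample_days
    ]


def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels where 1 means failure."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical asset sampled every 5 days, with one failure on day 22
sample_days = [0, 5, 10, 15, 20, 25, 30]
labels = label_pre_failure(sample_days, failure_days=[22], horizon_days=14)

# Hypothetical ground truth and model output for ten samples
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
precision, recall = precision_recall(y_true, y_pred)
```

Here one false alarm and one missed failure each cost a quarter of the respective score, which is why the two metrics are usually reported together.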

Survival Analysis

Survival analysis techniques from medical research adapt well to asset failure prediction. Cox proportional hazards models relate the hazard, or instantaneous failure rate, to asset characteristics and operating conditions. These models handle censored data, where some assets have not yet failed, so assets still in service contribute information instead of being discarded.

Extensions like random survival forests combine survival analysis with ensemble learning for improved prediction accuracy. These models predict entire survival curves showing failure probability over time rather than binary outcomes.
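To make censoring and survival curves concrete, here is a small sketch of the Kaplan-Meier estimator. Note that this is a simpler, nonparametric relative of the Cox and random survival forest models described above, not an implementation of either; it is used here only because it fits in a few lines. The function name and the fleet data are hypothetical.

```python
def kaplan_meier(durations, failed):
    """Return [(time, survival_probability)] at each observed failure time.

    durations: operating time until failure or until observation ended.
    failed:    True if the asset failed, False if censored (still running).
    """
    survival = 1.0
    curve = []
    failure_times = sorted({d for d, f in zip(durations, failed) if f})
    for t in failure_times:
        # Assets still under observation just before time t
        at_risk = sum(1 for d in durations if d >= t)
        deaths = sum(1 for d, f in zip(durations, failed) if f and d == t)
        survival *= 1.0 - deaths / at_risk
        curve.append((t, survival))
    return curve


# Hypothetical fleet: operating years, and whether each asset has failed
durations = [2, 3, 3, 5, 6, 8]
failed = [True, True, False, True, False, True]
curve = kaplan_meier(durations, failed)
```

The two censored assets never count as failures, but they do stay in the at-risk denominator until their observation ends, which is how they contribute information.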

Time Series Forecasting

Time series models forecast future degradation trajectories from historical sensor data. LSTM networks capture long-term dependencies in degradation processes. Attention mechanisms identify which historical observations are most relevant for predictions. Ensemble approaches combine multiple forecasting models for robustness.

Anomaly Detection Systems

Anomaly detection identifies unusual patterns that may indicate emerging problems. These systems continuously monitor asset behavior and alert when conditions deviate from normal patterns.

Statistical Process Control

Traditional statistical process control establishes baseline normal operating ranges and triggers alerts when measurements exceed control limits. Multivariate extensions handle correlated sensor measurements. Machine learning enhances these methods by adaptively learning normal patterns rather than relying on fixed thresholds.
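A minimal sketch of the fixed-threshold baseline follows, assuming the common convention of control limits at three standard deviations around the baseline mean. The function names and the temperature readings are illustrative.

```python
import statistics


def control_limits(baseline, n_sigma=3.0):
    """Return (lower, upper) control limits from healthy baseline readings."""
    mean = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)  # sample standard deviation
    return mean - n_sigma * sigma, mean + n_sigma * sigma


def out_of_control(values, lower, upper):
    """Return the indices of readings outside the control limits."""
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical bearing temperatures (degrees C) from a healthy period
baseline = [70.1, 69.8, 70.4, 70.0, 69.9, 70.2, 70.3, 69.7]
lower, upper = control_limits(baseline)

# New readings; only the third falls outside the limits
alerts = out_of_control([70.0, 70.5, 73.2, 69.9], lower, upper)
```

An adaptive variant would recompute the limits over a moving window or per operating mode instead of fixing them once.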

Autoencoder Networks

Autoencoders are neural networks trained to compress and reconstruct normal operating data. When processing new data, reconstruction error indicates how different the new data is from learned normal patterns. Large reconstruction errors signal anomalies requiring investigation.
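The reconstruction-error idea can be sketched without a deep learning framework. The example below assumes NumPy is available and uses principal component analysis as a stand-in for a linear autoencoder; a real deployment would more likely train a nonlinear network. All names, the two-sensor setup, and the 99th-percentile threshold are illustrative assumptions.

```python
import numpy as np


def fit_linear_autoencoder(normal_data, n_components):
    """Learn a low-dimensional linear encoding from normal data (PCA)."""
    mean = normal_data.mean(axis=0)
    _, _, vt = np.linalg.svd(normal_data - mean, full_matrices=False)
    return mean, vt[:n_components]


def reconstruction_error(x, mean, components):
    """Squared error after encoding and decoding each row of x."""
    centered = np.atleast_2d(x) - mean
    reconstructed = centered @ components.T @ components
    return np.sum((centered - reconstructed) ** 2, axis=1)


rng = np.random.default_rng(0)
load = rng.normal(size=(500, 1))
# Two hypothetical sensors that both track a shared load, plus small noise
normal = np.hstack([load, 2.0 * load]) + 0.05 * rng.normal(size=(500, 2))
mean, components = fit_linear_autoencoder(normal, n_components=1)

# Alert threshold: 99th percentile of errors seen on normal data
threshold = np.quantile(reconstruction_error(normal, mean, components), 0.99)

healthy = reconstruction_error([1.0, 2.0], mean, components)[0]
faulty = reconstruction_error([1.0, -2.0], mean, components)[0]
```

The faulty reading has plausible values on each sensor individually; it stands out only because the learned relationship between the sensors is broken, which is the kind of fault a per-sensor threshold would miss.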

Variational autoencoders provide probabilistic frameworks that quantify uncertainty in anomaly scores. This uncertainty quantification helps distinguish true anomalies from normal variation.

Isolation Forests

Isolation forests identify anomalies by randomly partitioning the data space. Anomalies are isolated with fewer partitions than normal points because they are rare and lie far from the dense regions where normal points cluster. This approach handles high-dimensional data efficiently and requires no labeled anomalies for training.
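The partition-counting idea can be illustrated with a simplified sketch. This is not a full isolation forest: it omits the path-length normalization used in the published algorithm and builds only the branch that contains the scored point. The function names and the two-dimensional test data are hypothetical.

```python
import random


def isolation_depth(point, data, rng, depth=0, max_depth=10):
    """Count random splits needed to separate point from the rest of data."""
    if len(data) <= 1 or depth >= max_depth:
        return depth
    dim = rng.randrange(len(point))
    lo = min(row[dim] for row in data)
    hi = max(row[dim] for row in data)
    if lo == hi:
        return depth
    split = rng.uniform(lo, hi)
    # Keep only the rows on the same side of the split as the point
    same_side = [r for r in data if (r[dim] < split) == (point[dim] < split)]
    return isolation_depth(point, same_side, rng, depth + 1, max_depth)


def mean_isolation_depth(point, data, n_trees=100, sample_size=64, seed=0):
    """Average isolation depth of point over many random subsamples."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_trees):
        sample = rng.sample(data, min(sample_size, len(data))) + [point]
        total += isolation_depth(point, sample, rng)
    return total / n_trees


data_rng = random.Random(1)
normal = [(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(256)]

typical_depth = mean_isolation_depth((0.0, 0.0), normal)
outlier_depth = mean_isolation_depth((6.0, 6.0), normal)
```

A lower average depth means the point was easier to isolate, so `outlier_depth` comes out well below `typical_depth`.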

Remaining Useful Life Prediction

Remaining useful life prediction estimates how much longer an asset can operate before failure. These predictions enable planning maintenance at optimal times that balance operational needs with cost considerations.

Degradation Modeling

Degradation models track how asset condition evolves over time. Physics-based models represent known failure mechanisms mathematically. Data-driven models learn degradation patterns from historical condition measurements. Hybrid approaches combine physics and data for improved accuracy and interpretability.
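As a minimal data-driven example, the sketch below fits a straight-line trend to a condition indicator and extrapolates to a failure threshold. It assumes degradation is roughly linear and that a higher reading means worse condition; the function name, the vibration values, and the threshold of 7.0 are all hypothetical.

```python
def linear_rul(times, condition, failure_threshold):
    """Estimate remaining useful life by extrapolating a least-squares line."""
    n = len(times)
    t_mean = sum(times) / n
    c_mean = sum(condition) / n
    covariance = sum((t - t_mean) * (c - c_mean) for t, c in zip(times, condition))
    variance = sum((t - t_mean) ** 2 for t in times)
    slope = covariance / variance
    intercept = c_mean - slope * t_mean
    if slope <= 0:
        return float("inf")  # no upward trend, so no crossing is predicted
    crossing_time = (failure_threshold - intercept) / slope
    return max(0.0, crossing_time - times[-1])


# Hypothetical vibration velocity (mm/s) measured every 100 operating hours
hours = [0.0, 100.0, 200.0, 300.0, 400.0]
vibration = [2.0, 2.5, 3.0, 3.5, 4.0]
rul_hours = linear_rul(hours, vibration, failure_threshold=7.0)
```

With these readings the trend rises 0.5 mm/s per 100 hours, so the fitted line reaches the threshold about 600 hours after the last measurement. Real degradation is often nonlinear, which is where physics-based or hybrid models earn their keep.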

Recurrent Neural Networks

LSTM and GRU networks process sequential condition measurements to predict remaining life. These networks capture complex temporal dependencies and handle varying sequence lengths. Attention mechanisms identify which historical observations most strongly influence current degradation state.

Probabilistic Predictions

Uncertainty in remaining life predictions is substantial, especially for long horizons. Probabilistic approaches quantify this uncertainty rather than providing misleading point estimates. Bayesian neural networks and quantile regression produce prediction intervals showing ranges of possible remaining lives at different confidence levels.
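Quantile regression works by minimizing the pinball (quantile) loss, which penalizes over- and under-prediction asymmetrically. The sketch below shows only the loss itself, not a trained model; the function name and the remaining-life values are illustrative.

```python
def pinball_loss(y_true, y_pred, quantile):
    """Mean pinball loss of predictions for the given quantile (0 to 1)."""
    total = 0.0
    for actual, predicted in zip(y_true, y_pred):
        diff = actual - predicted
        if diff >= 0:
            total += quantile * diff          # prediction was too low
        else:
            total += (quantile - 1.0) * diff  # prediction was too high
    return total / len(y_true)


# Hypothetical observed remaining lives, in days
observed = [100.0, 120.0, 80.0, 150.0]

# For the 10th percentile, a cautious low estimate scores better
low_guess = pinball_loss(observed, [85.0] * 4, quantile=0.1)
high_guess = pinball_loss(observed, [140.0] * 4, quantile=0.1)
```

Training one model for a low quantile and another for a high quantile yields a prediction interval, for example the 10th to 90th percentile of remaining life.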

Optimal Maintenance Scheduling

Predicting failures and remaining life provides inputs to maintenance optimization that determines when interventions should occur. Machine learning enhances optimization by improving input accuracy and enabling more sophisticated scheduling algorithms.

Condition-Based Scheduling

Reinforcement learning treats maintenance scheduling as a sequential decision problem. The agent observes current asset conditions, chooses maintenance actions, and receives rewards based on the resulting costs and reliability. Through trial and error, usually in a simulated environment rather than on live assets, the agent learns policies that maximize long-term rewards.
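The loop of observing, acting, and receiving rewards can be sketched with tabular Q-learning on a toy single-asset simulator. Everything in the model below is an assumption made for illustration: the four health states, the 40% chance of degrading per step, and the reward values for production, planned maintenance, and failure.

```python
import random

N_STATES = 4          # 0 = as new, 1 = worn, 2 = badly worn, 3 = failed
RUN, MAINTAIN = 0, 1


def step(state, action, rng):
    """Return (next_state, reward) for a toy single-asset model."""
    if state == 3:                      # failure forces an expensive repair
        return 0, -100.0
    if action == MAINTAIN:              # planned maintenance restores the asset
        return 0, -10.0
    next_state = state + 1 if rng.random() < 0.4 else state
    return next_state, 5.0              # running earns production value


def q_learning(n_steps=50_000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    state = 0
    for _ in range(n_steps):
        if rng.random() < epsilon:
            action = rng.randrange(2)   # explore
        else:
            action = max((RUN, MAINTAIN), key=lambda a: q[state][a])
        next_state, reward = step(state, action, rng)
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
    return q


q_table = q_learning()
policy = [max((RUN, MAINTAIN), key=lambda a: q_table[s][a])
          for s in range(N_STATES)]
```

Under these assumed costs the learned policy keeps a new asset running and maintains a badly worn one before it fails. Changing the ratio of failure cost to maintenance cost shifts where that switch happens.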

Deep Q-networks and policy gradient methods handle complex state spaces with many assets and actions. These approaches discover scheduling policies that account for operational constraints, cost structures, and risk preferences.

Multi-Asset Optimization

Maintaining multiple assets creates opportunities for coordination that reduces costs. Machine learning identifies which assets should be maintained together to minimize downtime and mobilization costs. Clustering algorithms group assets by operational patterns and degradation behaviors. Graph neural networks model dependencies between assets in networked systems.

Feature Engineering for Maintenance

Effective machine learning requires informative features that capture relevant patterns. Feature engineering transforms raw sensor data into representations suitable for learning algorithms.

Time Domain Features

Statistical summaries of time domain signals provide simple but informative features. Mean values indicate baseline operating levels. Standard deviations measure variability. Skewness and kurtosis capture distribution shapes. Trend analysis detects drift over time.
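A short sketch of these summaries, using only the standard library, might look like the following. The function name and the feature keys are illustrative; skewness and kurtosis use the population (biased) definitions, and the signal is assumed to be non-constant so the standard deviation is not zero.

```python
import statistics


def time_domain_features(signal):
    """Compute basic statistical features for one window of sensor data."""
    n = len(signal)
    mean = statistics.fmean(signal)
    std = statistics.pstdev(signal)
    z = [(x - mean) / std for x in signal]  # assumes std > 0

    # Least-squares slope against the sample index, as a simple trend measure
    t_mean = (n - 1) / 2
    slope = (sum((t - t_mean) * (x - mean) for t, x in enumerate(signal))
             / sum((t - t_mean) ** 2 for t in range(n)))

    return {
        "mean": mean,
        "std": std,
        "skewness": sum(v ** 3 for v in z) / n,
        "kurtosis": sum(v ** 4 for v in z) / n,
        "trend_slope": slope,
    }


features = time_domain_features([1.0, 2.0, 3.0, 4.0, 5.0])
```

In practice these features are computed over sliding windows, so each window of raw samples becomes one row of model input.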

Frequency Domain Features

Fourier transforms decompose vibration signals into frequency components. Specific frequency bands correspond to particular failure modes like bearing defects or gear tooth damage. Spectral analysis identifies shifts in frequency content indicating developing problems.
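The following sketch, assuming NumPy is available, computes spectral energy within a frequency band. The synthetic signal, the 50 Hz shaft component, and the 120 Hz defect component are hypothetical; real defect frequencies depend on bearing geometry and shaft speed.

```python
import numpy as np


def band_energy(signal, sample_rate_hz, band_hz):
    """Sum of squared FFT magnitudes with frequency in [low, high)."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate_hz)
    low, high = band_hz
    mask = (freqs >= low) & (freqs < high)
    return float(spectrum[mask].sum())


# One second of synthetic vibration sampled at 1 kHz
sample_rate_hz = 1000
t = np.arange(1000) / sample_rate_hz
signal = np.sin(2 * np.pi * 50 * t) + 0.3 * np.sin(2 * np.pi * 120 * t)

shaft_energy = band_energy(signal, sample_rate_hz, (40, 60))
defect_energy = band_energy(signal, sample_rate_hz, (110, 130))
quiet_energy = band_energy(signal, sample_rate_hz, (200, 300))
```

Tracking `defect_energy` over time, rather than its value in a single window, is what reveals a developing problem.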

Wavelet Features

Wavelet transforms provide time-frequency representations capturing transient events and non-stationary behavior. These features are particularly valuable for detecting impacts, cracks, and other localized defects.
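As a small illustration, one level of the Haar wavelet transform (the simplest wavelet) can be written in a few lines. Production systems would typically use a wavelet library and several decomposition levels; the function name and the signal with a single spike are hypothetical.

```python
import math


def haar_level(signal):
    """One level of the Haar wavelet transform for an even-length signal."""
    scale = math.sqrt(2.0)
    pairs = list(zip(signal[0::2], signal[1::2]))
    approx = [(a + b) / scale for a, b in pairs]  # smoothed, half-rate signal
    detail = [(a - b) / scale for a, b in pairs]  # local changes within pairs
    return approx, detail


# Steady signal with a single impact at sample 5
signal = [1.0, 1.0, 1.0, 1.0, 1.0, 5.0, 1.0, 1.0]
approx, detail = haar_level(signal)

# Index of the sample pair with the largest detail coefficient
impact_pair = max(range(len(detail)), key=lambda i: abs(detail[i]))
```

The detail coefficients are zero wherever the signal is steady and large only at the pair containing the impact, so the transform localizes the event in time, which a plain Fourier spectrum does not.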

Deep Feature Learning

Deep learning automatically discovers useful features from raw data, reducing manual engineering effort. Convolutional layers learn spatial features. Recurrent layers capture temporal patterns. Transfer learning leverages features learned on related datasets.

Implementation Challenges and Solutions

Despite enormous potential, implementing machine learning for predictive maintenance presents challenges that require thoughtful approaches.

Data Quality and Availability

Machine learning requires substantial high-quality training data. Many organizations lack sufficient failure histories for reliable model training. Solutions include transfer learning from similar assets, synthetic data generation, and semi-supervised learning that leverages unlabeled data.

Class Imbalance

Failures are rare events, which creates severe class imbalance: normal operation data vastly outnumbers failure examples. A model can then achieve high accuracy by simply predicting no failures, so accuracy alone is a misleading metric. Techniques that address this include oversampling minority classes, undersampling majority classes, and cost-sensitive learning that penalizes false negatives more heavily.
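The sketch below illustrates both the accuracy trap and one cost-sensitive remedy: class weights inversely proportional to class frequency, the same heuristic that scikit-learn documents for its "balanced" class weight option. The function names and the 95-to-5 split are illustrative.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    return {cls: n_samples / (len(counts) * count)
            for cls, count in counts.items()}


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical dataset: 95 healthy samples (0) and 5 pre-failure samples (1)
labels = [0] * 95 + [1] * 5

# A model that never predicts failure still looks accurate
always_healthy = [0] * len(labels)
naive_accuracy = accuracy(labels, always_healthy)

# Weights that make each missed failure cost far more than a false alarm
weights = balanced_class_weights(labels)
```

Passing such weights to a training loss makes the five failure samples count as much in total as the 95 healthy ones.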

Model Interpretability

Complex machine learning models often operate as black boxes, making their predictions difficult to explain. This lack of transparency hinders trust and regulatory acceptance. Explainable AI techniques including SHAP values and attention visualizations provide insights into model reasoning. Simpler models like decision trees offer inherent interpretability at some accuracy cost.

Concept Drift

Operating conditions and failure patterns evolve over time, causing model performance degradation. Continuous monitoring detects performance drift. Adaptive learning updates models as new data arrives. Ensemble approaches combining recent and historical models provide robustness.
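Continuous monitoring can be as simple as comparing recent prediction error against the error measured at deployment. The class below is a minimal sketch under that assumption; the class name, the window of 50 predictions, and the 1.5x tolerance are arbitrary illustrative choices.

```python
from collections import deque


class DriftMonitor:
    """Flag drift when recent mean error exceeds a baseline by a set factor."""

    def __init__(self, baseline_error, window=50, tolerance=1.5):
        self.baseline_error = baseline_error
        self.tolerance = tolerance
        self.errors = deque(maxlen=window)

    def update(self, abs_error):
        """Record one prediction error; return True if drift is suspected."""
        self.errors.append(abs_error)
        if len(self.errors) < self.errors.maxlen:
            return False  # not enough evidence yet
        recent = sum(self.errors) / len(self.errors)
        return recent > self.tolerance * self.baseline_error


monitor = DriftMonitor(baseline_error=1.0)
flags = [monitor.update(1.0) for _ in range(50)]   # stable period
flags += [monitor.update(2.0) for _ in range(50)]  # errors double after a change
first_alert = flags.index(True)
```

A raised flag would typically trigger retraining or a review of the model, not an automatic maintenance action.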

Case Study: Industrial Pump Failure Prediction

A manufacturing facility implemented machine learning predictive maintenance for centrifugal pumps critical to operations. Historical data included three years of vibration measurements, temperature readings, flow rates, and 47 recorded failures.

Feature engineering extracted 180 features from raw sensor data including statistical summaries, frequency domain characteristics, and wavelet coefficients. Random forest classification predicted failures with 92% precision and 85% recall at a two-week prediction horizon. The model identified bearing wear and impeller damage as primary failure modes.

Implementation reduced unplanned downtime by 60% and decreased maintenance costs by 35% through better spare parts inventory and scheduling. The system now monitors 200 pumps across multiple facilities with continuous model updating.

Future Directions

Machine learning for predictive maintenance continues to evolve rapidly. Edge computing enables real-time inference on asset-embedded processors. Digital twins combine physics models with machine learning for improved predictions. Federated learning trains models across distributed assets while preserving data privacy. Generative models create synthetic failure data for training.

Conclusion

Machine learning has matured from a research curiosity into a practical technology that delivers substantial value in predictive maintenance applications. Organizations implementing AI-powered maintenance strategies report significant reductions in downtime and costs while improving safety and reliability. Success requires high-quality data, appropriate algorithms, careful feature engineering, and thoughtful deployment that considers organizational context.

AssetAnalytics Online incorporates state-of-the-art machine learning capabilities in accessible tools designed for asset management professionals. Our platform provides pre-trained models, automated feature engineering, and guided deployment workflows that accelerate implementation while ensuring reliable results.